Tokenize Sensitive Data

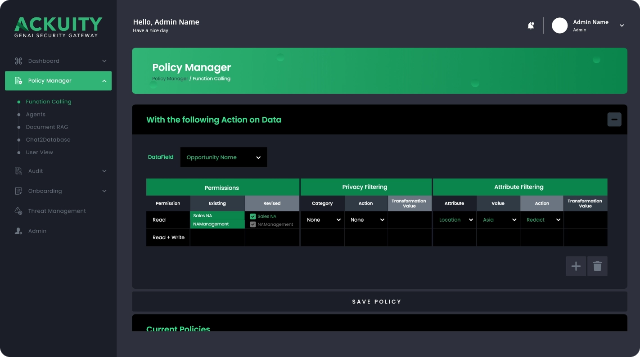

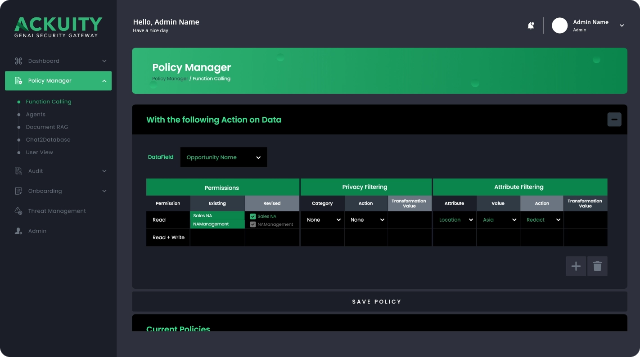

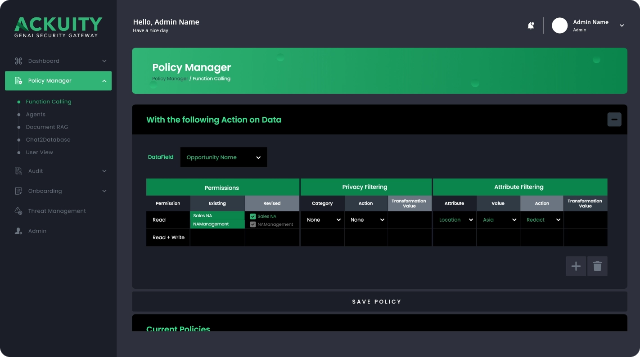

Connecting agents to APIs or database tables requires configuring both the backend systems and the agents. Multiple agents can connect to the same backend systems, creating a complex mesh of connections. Securing each connection, exchanging keys, setting up credentials, managing protocols, and limiting the fields that can be used all take time.

Any error in these configurations can also lead to a data breach or over-exposure of data. Ackuity abstracts away this complexity and provides a simple, uniform front end through which all agents connect and interact securely.

Function calling and agents

Ackuity can act as the tool that executes APIs on SaaS and other enterprise business applications, tokenizing the return value before it is shared with public LLMs. The GenAI application can then use Ackuity’s APIs to retrieve the original data from the tokens before showing the answer to enterprise users. This creates a seamless experience for users while eliminating the risk of data exposure.
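To make the flow concrete, here is a minimal Python sketch of tokenizing a tool's return value and restoring the originals afterwards. It is not Ackuity's API: `TokenVault`, `tokenize_tool_result`, the `[[KIND_n]]` token format, and the sample CRM record are all hypothetical names chosen for illustration, and the LLM call is simulated with a formatted string.

```python
import re


class TokenVault:
    """Hypothetical in-memory token store; a real deployment would persist and protect it."""

    # Matches tokens of the assumed form [[KIND_n]], e.g. [[NAME_1]].
    TOKEN_RE = re.compile(r"\[\[[A-Z_]+_\d+\]\]")

    def __init__(self):
        self._by_token = {}
        self._by_value = {}

    def tokenize(self, kind, value):
        """Return a stable token for (kind, value), creating one on first use."""
        key = (kind, value)
        if key not in self._by_value:
            token = f"[[{kind.upper()}_{len(self._by_token) + 1}]]"
            self._by_value[key] = token
            self._by_token[token] = value
        return self._by_value[key]

    def detokenize(self, text):
        """Replace every known token in text with its original value."""
        return self.TOKEN_RE.sub(
            lambda m: self._by_token.get(m.group(0), m.group(0)), text
        )


def tokenize_tool_result(result, sensitive_fields, vault):
    """Tokenize only the fields that the (assumed) policy marks as sensitive."""
    return {
        field: vault.tokenize(field, value) if field in sensitive_fields else value
        for field, value in result.items()
    }


vault = TokenVault()
crm_result = {"name": "Jane Doe", "email": "jane@example.com", "plan": "Enterprise"}

# Only this tokenized record would be passed to the public LLM.
safe_result = tokenize_tool_result(crm_result, {"name", "email"}, vault)

# Simulated LLM answer that refers to the token rather than the real name.
llm_answer = f"{safe_result['name']} is on the {safe_result['plan']} plan."

# Originals are restored just before the answer is shown to the user.
final_answer = vault.detokenize(llm_answer)
```

Typed tokens such as `[[NAME_1]]` are one possible design: they tell the model what kind of value was removed, so it can still reason about the record without seeing the value itself.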

Vector DB and documents

During RAG, document vectors related to the query are retrieved and sent to the LLM to generate a response. The data extracted from the vector DB may contain PII and other sensitive data. Ackuity can de-vectorize the extracted data before it is sent to LLMs, tokenize sensitive information (while retaining semantics), and re-vectorize it before inference. Ackuity can also detokenize the data in the response received from the public LLM, making it a seamless experience for the user.
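The text-substitution step in the middle of that pipeline might look like the following Python sketch. It assumes the retrieved chunks are already available as plain text and covers only tokenization and detokenization; the embedding and re-vectorization steps are out of scope. The regular expressions, the `[[KIND_n]]` token format, and the function names are illustrative assumptions. A production detector would need broader coverage, for example entity recognition for names.

```python
import re

# Assumed detectors for two PII types; real coverage would be much broader.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def tokenize_text(text, vault):
    """Replace each PII match with a typed placeholder and record it in vault."""
    for kind, pattern in PII_PATTERNS.items():

        def substitute(match, kind=kind):
            value = match.group(0)
            # Reuse an existing token so repeated values stay consistent.
            for token, original in vault.items():
                if original == value:
                    return token
            token = f"[[{kind}_{len(vault) + 1}]]"
            vault[token] = value
            return token

        text = pattern.sub(substitute, text)
    return text


def detokenize_text(text, vault):
    """Restore original values in text returned by the LLM."""
    for token, original in vault.items():
        text = text.replace(token, original)
    return text


vault = {}
retrieved_chunks = [
    "The customer SSN is 123-45-6789.",
    "Contact billing@example.com for renewals.",
]

# Placeholders keep the type of each value, so the chunk stays meaningful.
safe_chunks = [tokenize_text(chunk, vault) for chunk in retrieved_chunks]

# Simulated LLM response that echoes a token from the context.
llm_response = "The SSN on file is [[SSN_1]]."
final_response = detokenize_text(llm_response, vault)
```

Because each placeholder records the type of the value it replaces, a sentence like "The customer SSN is [[SSN_1]]." still reads as a statement about an SSN, which is one way to interpret "retaining semantics".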

Chat2Database

Ackuity can tokenize sensitive information from the SQL queries that LLMs generate to retrieve data from data stores. This prevents exposure of PII and other business-sensitive information that must be masked, such as SSNs, names, gender, and addresses. Ackuity can also detokenize this data when the GenAI application returns the full answer to the user.
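One possible reading of this flow is that the rows returned by an LLM-generated query are tokenized column by column before the LLM sees them. The Python sketch below illustrates that reading with an in-memory SQLite database. The table, the `SENSITIVE_COLUMNS` policy, the token format, and the helper names are hypothetical, and the generated SQL and the LLM answer are hard-coded stand-ins.

```python
import sqlite3

# Assumed masking policy: columns whose values must never reach the LLM.
SENSITIVE_COLUMNS = {"name", "ssn"}


def run_and_tokenize(conn, sql, vault):
    """Execute sql and return rows as dicts with sensitive columns tokenized."""
    cursor = conn.execute(sql)
    columns = [description[0] for description in cursor.description]
    safe_rows = []
    for row in cursor.fetchall():
        safe_row = {}
        for column, value in zip(columns, row):
            if column.lower() in SENSITIVE_COLUMNS and value is not None:
                token = f"[[{column.upper()}_{len(vault) + 1}]]"
                vault[token] = str(value)
                safe_row[column] = token
            else:
                safe_row[column] = value
        safe_rows.append(safe_row)
    return safe_rows


def detokenize(text, vault):
    """Restore original values before the answer is shown to the user."""
    for token, original in vault.items():
        text = text.replace(token, original)
    return text


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (name TEXT, ssn TEXT, state TEXT)")
conn.execute("INSERT INTO customers VALUES ('Jane Doe', '123-45-6789', 'CA')")

# Stand-in for a query produced by the LLM.
generated_sql = "SELECT name, ssn, state FROM customers"

vault = {}
safe_rows = run_and_tokenize(conn, generated_sql, vault)

# Simulated LLM answer built only from tokenized rows.
llm_answer = f"{safe_rows[0]['name']} lives in {safe_rows[0]['state']}."
final_answer = detokenize(llm_answer, vault)
```

Non-sensitive columns such as `state` pass through unchanged, so the LLM can still filter, group, and summarize on them.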